Russ Allbery: Review: Thud!

| Series: | Discworld #34 |

| Publisher: | Harper |

| Copyright: | October 2005 |

| Printing: | November 2014 |

| ISBN: | 0-06-233498-0 |

| Format: | Mass market |

| Pages: | 434 |

It is more important to recognize what direction people are moving than where they are.

I support trains and transit. I've donated money and written letters to politicians. But, realistically, there will never be transit here. People in my county are unable to move all the way to transit. But what can we do? Plenty. We bought an EV. I've been writing letters to the board of our local electrical co-op advocating for relaxation of rules around residential solar installations, and am planning one myself. It may well be that our solar-powered transportation winds up having a lower carbon footprint than the poster's transit use.

Pick your favorite cause. Whatever it is, consider your strategy: What do you do with someone that is very far away from you, but has taken the first step to move an inch in your direction? Do you yell at them for not being there instantly? Or do you celebrate that they have changed and are moving?

Until now, I haven't hacked my Kobo Libra 2 ereader, despite knowing it

is a relatively open device. The default document reader (Nickel) does

everything I need it to. Syncing the books via USB is tedious, but I don't do

it that often.

Via Videah's blog post "My E-Reader Setup", I learned of Plato, an alternative document reader.

Plato doesn't really offer any headline features that I need, but it cost

me nothing to try it out, so I installed it (fairly painlessly) and launched

it just once. The library view seems good, although I've not used it much:

I picked a book and read it through, and I'm 60% through another. I tend to

read one ebook at a time.

The main reader interface is great: just the text.

Page transitions are really, really fast. Tweaking the backlight intensity

is a little slower than Nickel: menu-driven rather than an active scroll

region (which is convenient in Nickel but easy to accidentally turn to 0%

and hard to recover from in pitch black).

Now that I've started down the road of hacking the Kobo, I think I will explore

wifi-syncing the library, perhaps using a variation on the hook scripts shared

in Videah's blog post.

region=CH means that date and number formats are also changed to CH version, e.g. one thousand and a half is displayed as 1'000,50.

en_US locale, with the above number shown/formatted as 1,000.50; well, it's more about parsing than formatting, but that's irrelevant.

% defaults read .GlobalPreferences | grep en_

AKLastLocale = "en_CH";

AppleLocale = "en_CH";

% defaults read -app FooBar

(has no AppleLocale key)

% defaults write -app FooBar AppleLocale en_US

The defaults man page says the global-global is NSGlobalDomain, I don't know where I got the .GlobalPreferences. But I only needed to know the key name (in this case, AppleLocale - of course it couldn't be LC_ALL/LANG).

One day I'll know MacOS better, but I've been trying to learn more for 2+ years now, and it's not a smooth ride. Old dog new tricks, right?

| Series: | Fall Revolution #3 |

| Publisher: | Tor |

| Copyright: | 1998 |

| Printing: | August 2000 |

| ISBN: | 0-8125-6858-3 |

| Format: | Mass market |

| Pages: | 305 |

Life is a process of breaking down and using other matter, and if need be, other life. Therefore, life is aggression, and successful life is successful aggression. Life is the scum of matter, and people are the scum of life. There is nothing but matter, forces, space and time, which together make power. Nothing matters, except what matters to you. Might makes right, and power makes freedom. You are free to do whatever is in your power, and if you want to survive and thrive you had better do whatever is in your interests. If your interests conflict with those of others, let the others pit their power against yours, everyone for theirselves. If your interests coincide with those of others, let them work together with you, and against the rest. We are what we eat, and we eat everything. All that you really value, and the goodness and truth and beauty of life, have their roots in this apparently barren soil. This is the true knowledge.

We had founded our idealism on the most nihilistic implications of science, our socialism on crass self-interest, our peace on our capacity for mutual destruction, and our liberty on determinism. We had replaced morality with convention, bravery with safety, frugality with plenty, philosophy with science, stoicism with anaesthetics and piety with immortality. The universal acid of the true knowledge had burned away a world of words, and exposed a universe of things. Things we could use.

This is certainly something that some people will believe, particularly cynical college students who love political theory, feeling smarter than other people, and calling their pet theories things like "the true knowledge." It is not even remotely believable as the governing philosophy of a solar confederation. The point of government for the average person in human society is to create and enforce predictable mutual rules that one can use as a basis for planning and habits, allowing you to not think about politics all the time.
People who adore thinking about politics have great difficulty understanding how important it is to everyone else to have ignorable government. Constantly testing your power against other coalitions is a sport, not a governing philosophy. Given the implication that this testing is through violence or the threat of violence, it beggars belief that any large number of people would tolerate that type of instability for an extended period of time.

Ellen is fully committed to the true knowledge. MacLeod likely is not; I don't think this represents the philosophy of the author. But the primary political conflict in this novel famous for being political science fiction is between the above variation of anarchy and an anarchocapitalist society, neither of which are believable as stable political systems for large numbers of people. This is a bit like seeking out a series because you were told it was about a great clash of European monarchies and discovering it was about a fight between Liberland and Sealand. It becomes hard to take the rest of the book seriously.

I do realize that one point of political science fiction is to play with strange political ideas, similar to how science fiction plays with often-implausible science ideas. But those ideas need some contact with human nature. If you're going to tell me that the key to clawing society back from a world-wide catastrophic descent into chaos is to discard literally every social system used to create predictability and order, you had better be describing aliens, because that's not how humans work.

The rest of the book is better. I am untangling a lot of backstory for the above synopsis, which in the book comes in dribs and drabs, but piecing that together is good fun. The plot is far more straightforward than the previous two books in the series: there is a clear enemy, a clear goal, and Ellen goes from point A to point B in a comprehensible way with enough twists to keep it interesting.
The core moral conflict of the book is that Ellen is an anti-AI fanatic to the point that she considers anyone other than non-uploaded humans to be an existential threat. MacLeod gives the reader both reasons to believe Ellen is right and reasons to believe she's wrong, which maintains an interesting moral tension.

One thing that MacLeod is very good at is what Bob Shaw called "wee thinky bits." I think my favorite in this book is the computer technology used by the Cassini Division, who have spent a century in close combat with inimical AI capable of infecting any digital computer system with tailored viruses. As a result, their computers are mechanical non-Von-Neumann machines, but mechanical with all the technology of a highly-advanced 24th century civilization with nanometer-scale manufacturing technology. It's a great mental image and a lot of fun to think about.

This is the only science fiction novel that I can think of that has a hard-takeoff singularity that nonetheless is successfully resisted and fought to a stand-still by unmodified humanity. Most writers who were interested in the singularity idea treated it as either a near-total transformation leaving only remnants or as something that had to be stopped before it started. MacLeod realizes that there's no reason to believe a post-singularity form of life would be either uniform in intent or free from its own baffling sudden collapses and reversals, which can be exploited by humans. It makes for a much better story.

The sociology of this book is difficult to swallow, but the characterization is significantly better than the previous books of the series and the plot is much tighter. I was too annoyed by the political science to fully enjoy it, but that may be partly the fault of my expectations coming in.
If you like chewy, idea-filled science fiction with a lot of unexplained world-building that you have to puzzle out as you go, you may enjoy this, although unfortunately I think you need to read at least The Stone Canal first. The ending was a bit unsatisfying, but even that includes some neat science fiction ideas.

Followed by The Sky Road, although I understand it is not a straightforward sequel.

Rating: 6 out of 10

| Series: | October Daye #14 |

| Publisher: | DAW |

| Copyright: | 2020 |

| ISBN: | 0-7564-1253-6 |

| Format: | Kindle |

| Pages: | 351 |

The new 7945HX CPU from AMD is currently the most powerful. I'd love to have one of them, to replace the now aging 6-core Xeon that I've been using for more than 5 years. So, I've been searching for a laptop with that CPU.

Absolutely all of the laptops I found with this CPU also embed a very powerful RTX 40x0 series GPU, that I have no use for: I don't play games, and I don't do AI. I just want something that builds Debian packages fast (like Ceph, that takes more than 1h to build for me). The more cores I get, the faster all OpenStack unit tests are running too (stestr does a moderately good job at spreading the tests to all cores). That'd be ok if I had to pay more for a GPU that I don't need, and I would deal with the annoyance of the NVidia driver, if only I could find something with a correct size. But I can only find 16" or bigger laptops, that won't fit in my scooter back case (most of the time, these laptops have a 17-inch screen: that's way too big).

Currently, I found:

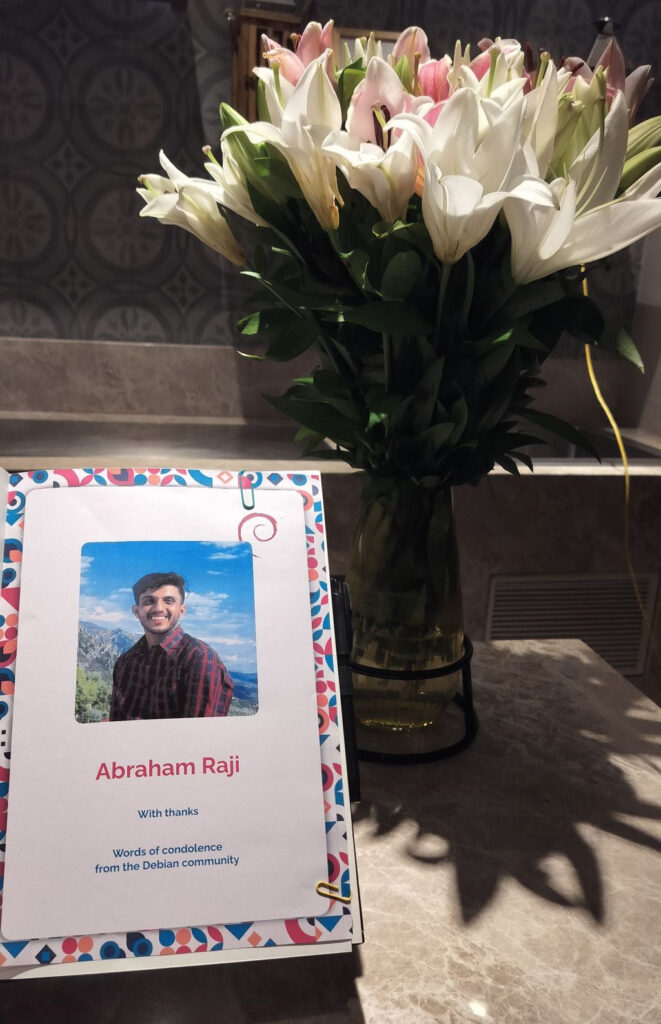

Man, you're no longer with us, but I am touched by the number of people you have positively impacted. Almost every DebConf23 presentation by locals I saw after you carried how you were instrumental in bringing them there. How you were a dear friend and brother.

It's a weird turn of events, that you left us during the one thing we deeply cared about and worked together towards making possible for the last 3 years. Who would have known that "Sahil, I'm going back to my apartment tonight" and a casual bye after that would be the last conversation we ever had.

Things were terrible after I heard the news. I had a hard time convincing myself to come see you one last time during your funeral. That was the last time I was going to get to see you, and I kept on looking at you. You, there in front of me, all calm, gave me peace. I'll carry that image all my life now. Your smile will always remain with me.

Now, who'll meet and receive me at the door at almost every Debian event (just by sheer coincidence?). Who'll help me speak out loud about all the Debian shortcomings (and then discuss solutions, when sober :)).

It was a testament to the amount of time we had already spent together online that when we first met during MDC Palakkad, it didn't feel like we were physically meeting for the first time. The conversations just flowed.

Now this song is associated with you due to your speech during the post MiniDebConf Palakkad dinner. Hearing it reminds me of all the times we spent together chilling and talking community (which you cared deeply about). I guess now we can't stop caring for the community, because your energy was contagious.

Now, I can't directly dial your number to hear "Hey Sahil! What's up?" from the other end, or "Tell me, tell me" on any mention of a problem. Nor will I be able to send you references to usage of your Debian packaging guide in the wild. You already know how popular this guide of yours is. How many people that guide has helped with getting started with packaging. Our last telegram text was me telling you about the guide's usage in Ravi's DebConf23 presentation. Did I ever tell you, I too got my first start with packaging from there. I started looking up to you from there, even before we met or talked. Now, I missed telling you, I was probably your biggest fan whenever you had the mic in hand and started speaking. You always surprised me with all the insights and ideas you brought, and kept on impressing me, for someone who was just my age but way more mature.

Reading recent toots from Raju Dev made me realize how much I loved your writings. You wrote "How the Future will remember Us", "Doing what's right" and many more. The level of depth in your thought was unparalleled. I loved reading those. That's why I kept pestering you to write more, which you slowly stopped. Now I fully understand why though. You were busy; really busy helping people out or just working on making things better. You were doing Debian, upstream projects, web development, designs, graphics, mentoring, free software evangelism while being the go-to person for almost everyone around. Everyone depended on you, because you were too kind to turn down anyone.

Man, I still get your spelling wrong :) Did I ever tell you that? That was the reason I used to use AR instead online.

You'll be missed and will always be part of our conversations, because you have left a profound impact on me, our friends, Debian India and everyone around. See you! The coolest man around.

In memory:

This post describes how to define and use rule templates with semantic names using extends or !reference tags, how

to define manual jobs using the same templates and how to use gitlab-ci

inputs as macros to give names to regular expressions used by rules.

rules.yml file stored on a common repository used from different projects as I mentioned on

my previous post, but they can be defined anywhere, the important thing is that the

files that need them include their definition somehow.

The first version of my rules.yml file was as follows:

.rules_common:
  # Common rules; we include them from others instead of forcing a workflow
  rules:
    # Disable branch pipelines while there is an open merge request from it
    - if: >-
        $CI_COMMIT_BRANCH &&
        $CI_OPEN_MERGE_REQUESTS &&
        $CI_PIPELINE_SOURCE != "merge_request_event"
      when: never

.rules_default:
  # Default rules, we need to add the when: on_success to make things work
  rules:
    - !reference [.rules_common, rules]
    - when: on_success

The .rules_common template defines a rule section to disable jobs as we can do on a workflow definition; in our case common rules only have if rules that apply to all jobs and are used to disable them. The example includes one that avoids creating duplicated jobs when we push to a branch that is the source of an open MR as explained here.

To use the rules in a job we have two options, use the extends keyword (we do that when we want to use the rule as is)

or declare a rules section and add a !reference to the template we want to use as described

here (we do that when we want to add

additional rules to disable a job before evaluating the template conditions).

As an example, with the following definitions both jobs use the same rules:

job_1:
  extends:
    - .rules_default
  [...]

job_2:
  rules:
    - !reference [.rules_default, rules]
  [...]

To make a job manual we have two options: create a version of the rule that includes when: manual and defines if we want it to be optional or not (allow_failure: true makes the job optional, if we don't add that to the rule the job is blocking) or add the when: manual and the allow_failure value to the job (if we work at the job level the default value for allow_failure is true for when: manual, so it is optional by default, we have to add an explicit allow_failure: false to make it blocking).

The following example shows how we define blocking or optional manual jobs using rules with when conditions:

.rules_default_manual_blocking:
  # Default rules for blocking manual jobs
  rules:
    - !reference [.rules_common, rules]
    - when: manual
      # allow_failure: false is implicit

.rules_default_manual_optional:
  # Default rules for optional manual jobs
  rules:
    - !reference [.rules_common, rules]
    - when: manual
      allow_failure: true

manual_blocking_job:
  extends:
    - .rules_default_manual_blocking
  [...]

manual_optional_job:
  extends:
    - .rules_default_manual_optional
  [...]

Using the job-level approach, the same jobs are defined as follows:

manual_blocking_job:
  extends:
    - .rules_default
  when: manual
  allow_failure: false
  [...]

manual_optional_job:
  extends:
    - .rules_default
  when: manual
  # allow_failure: true is implicit
  [...]

rules.yml file smaller and I see that the job is manual in its definition without problem.

allow_failure, changes, exists, needs or variables

Unluckily for us, for now there is no way to avoid creating additional templates as we did on the when: manual case

when a rule is similar to an existing one but adds changes,

exists,

needs or

variables to it.

So, for now, if a rule needs to add any of those fields we have to copy the original rule and add the keyword section.

Some notes, though:

- allow_failure: we only need to add it to the rule if we want to change its value for a given condition, in other cases we can set the value at the job level.
- changes: if we add changes to the rule it is important to make sure that they are going to be evaluated as explained here.
- needs: if we add a needs value to a rule for a specific condition and it matches, it replaces the job needs section; when using templates I would use two different job names with different conditions instead of adding a needs on a single job.

main branch and use short-lived branches to test and complete changes before pushing things to main.

Using this approach we can define an initial set of rule templates with semantic names:

.rules_mr_to_main:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == 'main'

.rules_mr_or_push_to_main:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == 'main'
    - if: >-
        $CI_COMMIT_BRANCH == 'main'
        &&
        $CI_PIPELINE_SOURCE != 'merge_request_event'

.rules_push_to_main:
  rules:
    - !reference [.rules_common, rules]
    - if: >-
        $CI_COMMIT_BRANCH == 'main'
        &&
        $CI_PIPELINE_SOURCE != 'merge_request_event'

.rules_push_to_branch:
  rules:
    - !reference [.rules_common, rules]
    - if: >-
        $CI_COMMIT_BRANCH != 'main'
        &&
        $CI_PIPELINE_SOURCE != 'merge_request_event'

.rules_push_to_branch_or_mr_to_main:
  rules:
    - !reference [.rules_push_to_branch, rules]
    - if: >-
        $CI_MERGE_REQUEST_SOURCE_BRANCH_NAME != 'main'
        &&
        $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == 'main'

.rules_release_tag:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_COMMIT_TAG =~ /^([0-9a-zA-Z_.-]+-)?v\d+.\d+.\d+$/

.rules_non_release_tag:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_COMMIT_TAG !~ /^([0-9a-zA-Z_.-]+-)?v\d+.\d+.\d+$/

inputs as macros

On the previous rules we have used a regular expression to identify the release tag format and assumed that the

general branches are the ones with a name different than main; if we want to force a format for those branch names

we can replace the condition != 'main' by a regex comparison (=~ if we look for matches, !~ if we want to define

valid branch names removing the invalid ones).
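Before putting a branch-name pattern into a rule it can help to try it locally. The following is only a sketch: the pattern (conventional commit types as a prefix) and the function name is_changes_branch are illustrative assumptions, and grep -E uses POSIX extended regular expressions, which may differ from the engine GitLab uses for more complex patterns.

```shell
#!/bin/sh
# Illustrative pattern: a conventional-commit type, a slash and a non-empty name.
# This is an assumption for the example, not a format required by GitLab.
BRANCH_RE='^(build|ci|chore|docs|feat|fix|perf|refactor|style|test)/.+$'

# is_changes_branch NAME: succeed (exit 0) if NAME matches BRANCH_RE
is_changes_branch() {
  printf '%s\n' "$1" | grep -Eq "$BRANCH_RE"
}
```

For example, `is_changes_branch "feat/login"` succeeds, while `is_changes_branch "main"` fails because the name has no recognised prefix.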

When testing the new gitlab-ci inputs my colleague Jorge noticed that

if you keep their default value they basically work as macros.

The variables declared as inputs can't hold YAML values; the truth is that their value is always a string that is replaced by the value assigned to them when including the file (if given) or by their default value, if defined.

If you don't assign a value to an input variable when including the file that declares it, its occurrences are replaced by its default value, making them work basically as macros; this is useful for us when working with strings that can't be managed as variables, like the regular expressions used inside if conditions.

With those two ideas we can add the following prefix to the rules.yml defining inputs for both regular expressions and replace the rules that can use them by the ones shown here:

spec:
  inputs:
    # Regular expression for branches; the prefix matches the type of changes
    # we plan to work on inside the branch (we use conventional commit types as
    # the branch prefix)
    branch_regex:
      default: '/^(build|ci|chore|docs|feat|fix|perf|refactor|style|test)\/.+$/'
    # Regular expression for tags
    release_tag_regex:
      default: '/^([0-9a-zA-Z_.-]+-)?v\d+.\d+.\d+$/'
---
[...]

.rules_push_to_changes_branch:
  rules:
    - !reference [.rules_common, rules]
    - if: >-
        $CI_COMMIT_BRANCH =~ $[[ inputs.branch_regex ]]
        &&
        $CI_PIPELINE_SOURCE != 'merge_request_event'

.rules_push_to_branch_or_mr_to_main:
  rules:
    - !reference [.rules_push_to_branch, rules]
    - if: >-
        $CI_MERGE_REQUEST_SOURCE_BRANCH_NAME =~ $[[ inputs.branch_regex ]]
        &&
        $CI_MERGE_REQUEST_TARGET_BRANCH_NAME == 'main'

.rules_release_tag:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_COMMIT_TAG =~ $[[ inputs.release_tag_regex ]]

.rules_non_release_tag:
  rules:
    - !reference [.rules_common, rules]
    - if: $CI_COMMIT_TAG !~ $[[ inputs.release_tag_regex ]]

We can use !reference tags to fine tune rules when we need to add conditions to disable them, simply adding conditions with when: never before referencing the template.

As an example, in some projects I'm using different job definitions depending on the DEPLOY_ENVIRONMENT value to make the job manual or automatic; as we just said we can define different jobs referencing the same rule adding a condition to check if the environment is the one we are interested in:

deploy_job_auto:
  rules:
    # Only deploy automatically if the environment is 'dev' by skipping this job
    # for other values of the DEPLOY_ENVIRONMENT variable
    - if: $DEPLOY_ENVIRONMENT != "dev"
      when: never
    - !reference [.rules_release_tag, rules]
  [...]

deploy_job_manually:
  rules:
    # Disable this job if the environment is 'dev'
    - if: $DEPLOY_ENVIRONMENT == "dev"
      when: never
    - !reference [.rules_release_tag, rules]
  when: manual
  # Change this to false to make the deployment job blocking
  allow_failure: true
  [...]

This is the same idea we apply with the .rules_common template; we add conditions to disable the job before evaluating the real rules.

The difference in that case is that we reference them at the beginning because we want those negative conditions on all jobs and that is also why we have a .rules_default condition with a when: on_success for the jobs that only need to respect the default workflow (we need the last condition to make sure that they are executed if the negative rules don't match).

I very, very nearly didn't make it to DebConf this year. I had a bad cold/flu for a few days before I left, and after a negative covid-19 test just minutes before my flight, I decided to take the plunge and travel.

This is just everything in chronological order, more or less; it's the only way I could write it.

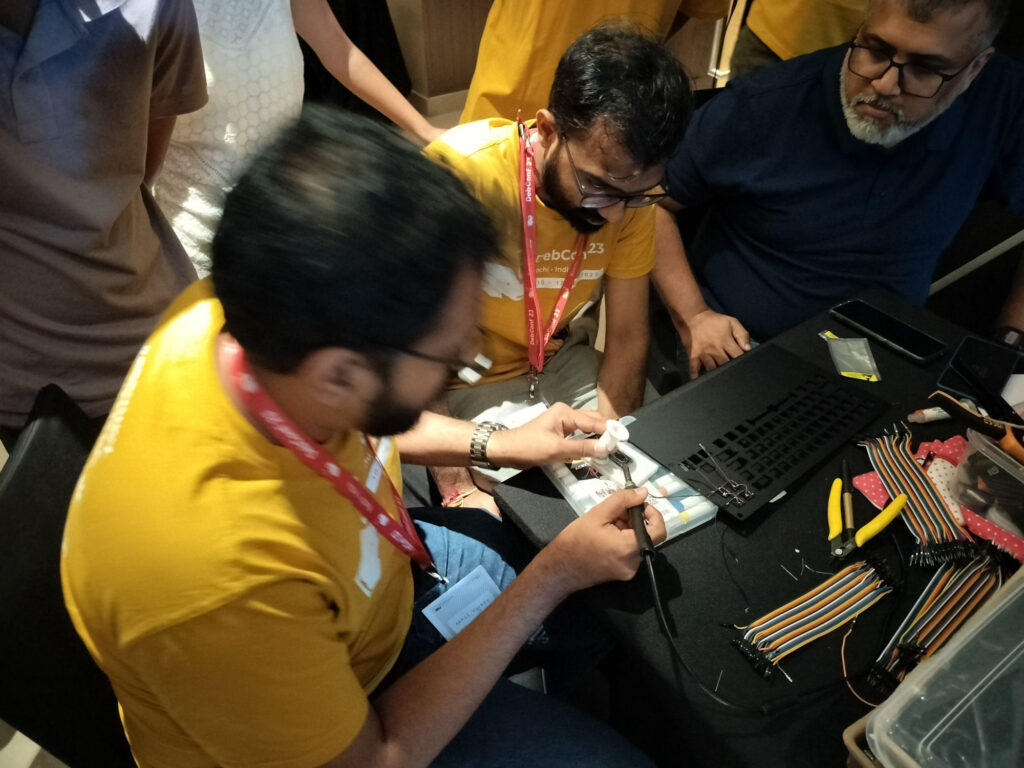

If you got one of these Cheese & Wine bags from DebConf, that's from the South African local group!

Some hopefully harmless soldering.

This post describes how to handle files that are used as assets by jobs and pipelines defined on a common gitlab-ci

repository when we include those definitions from a different project.

When a .gitlab-ci.yml file includes files from a different repository its contents are expanded and the resulting code is the same as the one generated when the included files are local to the repository.

In fact, even when the remote files include other files everything works right, as they are also expanded (see the

description of how included files are merged

for a complete explanation), allowing us to organise the common repository as we want.

As an example, suppose that we have the following script on the assets/ folder of the common repository:

dumb.sh

#!/bin/sh
echo "The script arguments are: '$@'"

and a job that calls it:

job:
  script:
    - $CI_PROJECT_DIR/assets/dumb.sh ARG1 ARG2

When the job runs on the common repository the output is:

The script arguments are: 'ARG1 ARG2'

but when the job definition is included from a different project it fails:

/scripts-23-19051/step_script: eval: line 138: d./assets/dumb.sh: not found

Includes only bring in YAML files, but if a script wants to use other files from the common repository as an asset (configuration file, shell script, template, etc.), the execution fails if the files are not available on the project that includes the remote job definition.

base64 or something similar, making maintenance harder.

As an example, imagine that we want to use the dumb.sh script presented in the previous section and we want to call it from the same PATH of the main project (in the examples we are using the same folder; in practice we can create a hidden folder inside the project directory or use a PATH like /tmp/assets-$CI_JOB_ID to leave things outside the project folder and make sure that there will be no collisions if two jobs are executed on the same place, i.e. when using a ssh runner).

To create the file we will use hidden jobs to write our script

template and reference tags to add it to the

scripts when we want to use them.

Here we have a snippet that creates the file with cat:

    .file_scripts:
      create_dumb_sh:
        - |
          # Create dumb.sh script
          mkdir -p "${CI_PROJECT_DIR}/assets"
          cat >"${CI_PROJECT_DIR}/assets/dumb.sh" <<EOF
          #!/bin/sh
          echo "The script arguments are: '\$@'"
          EOF
          chmod +x "${CI_PROJECT_DIR}/assets/dumb.sh"

Note that the $@ has to be escaped so the HERE document does not expand it. To avoid the escaping with base64 we replace the previous snippet by this:

    .file_scripts:
      create_dumb_sh:
        - |
          # Create dumb.sh script
          mkdir -p "${CI_PROJECT_DIR}/assets"
          base64 -d >"${CI_PROJECT_DIR}/assets/dumb.sh" <<EOF
          IyEvYmluL3NoCmVjaG8gIlRoZSBzY3JpcHQgYXJndW1lbnRzIGFyZTogJyRAJyIK
          EOF
          chmod +x "${CI_PROJECT_DIR}/assets/dumb.sh"

Note that we have to indent the base64 version of the file using 6 spaces (all lines of the base64 output have to be indented) and to make changes we have to decode and re-code the file manually, making it harder to maintain.
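Producing the indented payload by hand is error prone, so a small helper can generate it. The following is only a sketch: the function name indent_base64 is made up for this example, and it assumes a base64 command that reads from standard input (as the GNU coreutils one does).

```shell
#!/bin/sh
# Print FILE encoded as base64 with every output line indented by six
# spaces, ready to paste between the <<EOF and EOF lines of the hidden job.
# (indent_base64 is a hypothetical helper, not part of any tool.)
indent_base64() {
  base64 <"$1" | sed 's/^/      /'
}

# Demo: recreate dumb.sh in a temporary directory and print its encoded form.
demo_dir="$(mktemp -d)"
printf '%s\n' '#!/bin/sh' "echo \"The script arguments are: '\$@'\"" \
  >"$demo_dir/dumb.sh"
indent_base64 "$demo_dir/dumb.sh"
```

For the dumb.sh content used in this post the helper prints the same encoded line that appears in the base64 snippet. Editing the asset still means re-running the helper and pasting the result again.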

With either version we just need to add a !reference before using the script; if we add the call on the first lines of the before_script we can use the generated file in the before_script, script or after_script sections of the job without problems:

    job:
      before_script:
        - !reference [.file_scripts, create_dumb_sh]
      script:
        - ${CI_PROJECT_DIR}/assets/dumb.sh ARG1 ARG2

The output is the expected one:

    The script arguments are: 'ARG1 ARG2'

The other approach is to download the files from the common repository: we keep them in a single folder (i.e. assets) and prepare a YAML file that declares some variables (i.e. the URL of the templates project and the PATH where we want to download the files) and defines a script fragment to download the complete folder.

Once we have the YAML file we just need to include it and add a reference to the script fragment at the beginning of the

before_script of the jobs that use files from the assets directory and they will be available when needed.
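As a rough local illustration of the "download the complete folder" step, the sketch below extracts a stand-in archive the same way a download fragment could. It assumes that the archive GitLab returns for a sub path wraps the files in a top-level directory followed by the sub path itself, so extracting with --strip-components 2 drops both levels and leaves the files directly in the destination. The function name extract_assets and the directory names are made up, and a locally built tarball replaces the real API response.

```shell
#!/bin/sh
# Extract a gzipped tar archive read from stdin into DEST, dropping the two
# leading path components (assumed layout: "<top-level-dir>/assets/<files>").
# extract_assets is a hypothetical helper used only for this illustration.
extract_assets() {
  mkdir -p "$1"
  tar --strip-components 2 -C "$1" -xzf -
}

# Build a stand-in archive with the assumed layout (invented names).
work="$(mktemp -d)"
mkdir -p "$work/src/ci-templates-main-0123abc-assets/assets"
printf '%s\n' '#!/bin/sh' 'echo "hello from dumb.sh"' \
  >"$work/src/ci-templates-main-0123abc-assets/assets/dumb.sh"
tar -C "$work/src" -czf "$work/archive.tar.gz" ci-templates-main-0123abc-assets

# Extract it and list the destination: the file sits at the top level.
extract_assets "$work/out" <"$work/archive.tar.gz"
ls "$work/out"
```

If the real archive layout differs, only the number passed to --strip-components needs to change.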

The following file is an example of the YAML file we just mentioned:

    variables:
      CI_TMPL_API_V4_URL: "${CI_API_V4_URL}/projects/common%2Fci-templates"
      CI_TMPL_ARCHIVE_URL: "${CI_TMPL_API_V4_URL}/repository/archive"
      CI_TMPL_ASSETS_DIR: "/tmp/assets-${CI_JOB_ID}"

    .scripts_common:
      bootstrap_ci_templates:
        - |
          # Downloading assets
          echo "Downloading assets"
          mkdir -p "$CI_TMPL_ASSETS_DIR"
          wget -q -O - --header="PRIVATE-TOKEN: $CI_TMPL_READ_TOKEN" \
            "$CI_TMPL_ARCHIVE_URL?path=assets&sha=${CI_TMPL_REF:-main}" |
            tar --strip-components 2 -C "$CI_TMPL_ASSETS_DIR" -xzf -

CI_TMPL_API_V4_URL: URL of the common project, in our case we are using the project ci-templates inside the common group (note that the slash between the group and the project is escaped, that is needed to reference the project by name; if we don't like that approach we can replace the URL-encoded path by the project id, i.e. we could use a value like ${CI_API_V4_URL}/projects/31).

CI_TMPL_ARCHIVE_URL: Base URL to use the gitlab API to download files from a repository; we will add the arguments path and sha to select which sub path to download and from which commit, branch or tag (we will explain later why we use the CI_TMPL_REF, for now just keep in mind that if it is not defined we will download the version of the files available on the main branch when the job is executed).

CI_TMPL_ASSETS_DIR: Destination of the downloaded files.

CI_TMPL_READ_TOKEN: token that includes the read_api scope for the common project; we need it because the tokens created by the CI/CD pipelines of other projects can't be used to access the api of the common one. We define the variable on the gitlab CI/CD variables section to be able to change it if needed (i.e. if it expires).

CI_TMPL_REF: branch or tag of the common repo from which to get the files (we need that to make sure we are using the right version of the files, i.e. when testing we will use a branch and on production pipelines we can use fixed tags to make sure that the assets don't change between executions unless we change the reference). We will set the value on the .gitlab-ci.yml file of the remote projects and will use the same reference when including the files to make sure that everything is coherent.

pipeline.yml

    include:
      - /bootstrap.yaml

    stages:
      - test

    dumb_job:
      stage: test
      before_script:
        - !reference [.scripts_common, bootstrap_ci_templates]
      script:
        - ${CI_TMPL_ASSETS_DIR}/dumb.sh ARG1 ARG2

.gitlab-ci.yml

    include:
      - project: 'common/ci-templates'
        ref: &ciTmplRef 'main'
        file: '/pipeline.yml'

    variables:
      CI_TMPL_REF: *ciTmplRef

Note the YAML anchor used to set the CI_TMPL_REF variable (as far as I know we have to pass the ref value explicitly to know which reference was used when including the file; the anchor allows us to make sure that the value is always the same in both places).

The reference we use is quite important for the reproducibility of the jobs, if we don t use fixed tags or commit

hashes as references each time a job that downloads the files is executed we can get different versions of them.

For that reason is not a bad idea to create tags on our common repo and use them as reference on the projects or

branches that we want to behave as if their CI/CD configuration was local (if we point to a fixed version of the common

repo the way everything is going to work is almost the same as having the pipelines directly in our repo).

But while developing pipelines using branches as references is a really useful option; it allows us to re-run the jobs

that we want to test and they will download the latest versions of the asset files on the branch, speeding up the

testing process.

However keep in mind that the trick only works with the asset files, if we change a job or a pipeline on the YAML

files restarting the job is not enough to test the new version as the restart uses the same job created with the current

pipeline.

To try the updated jobs we have to create a new pipeline using a new action against the repository or executing the

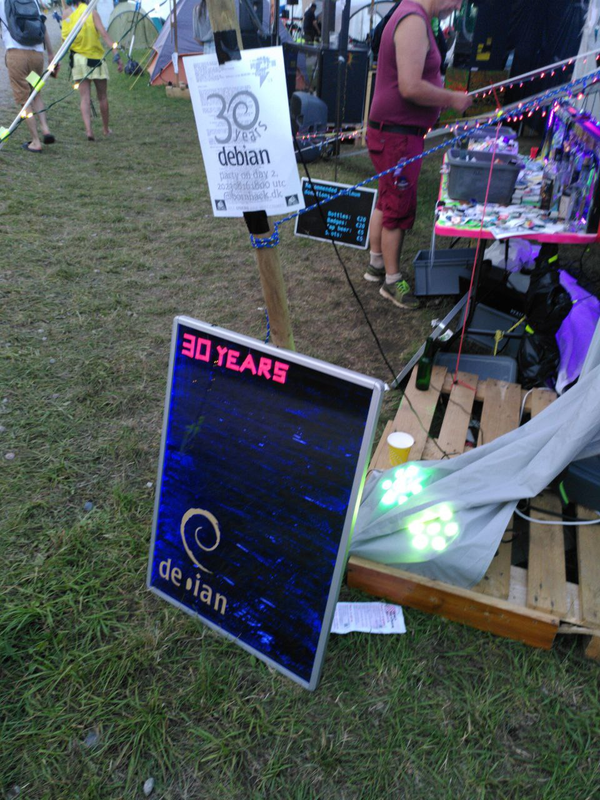

pipeline manually. Debian Celebrates 30 years!

We celebrated our birthday this year and

we had a great time with new friends, new members welcomed to the community,

and the world.

We have collected a few comments, videos, and discussions from

around the Internet, and some images from some of the

DebianDay2023 events. We hope that

you enjoyed the day(s) as much as we did!

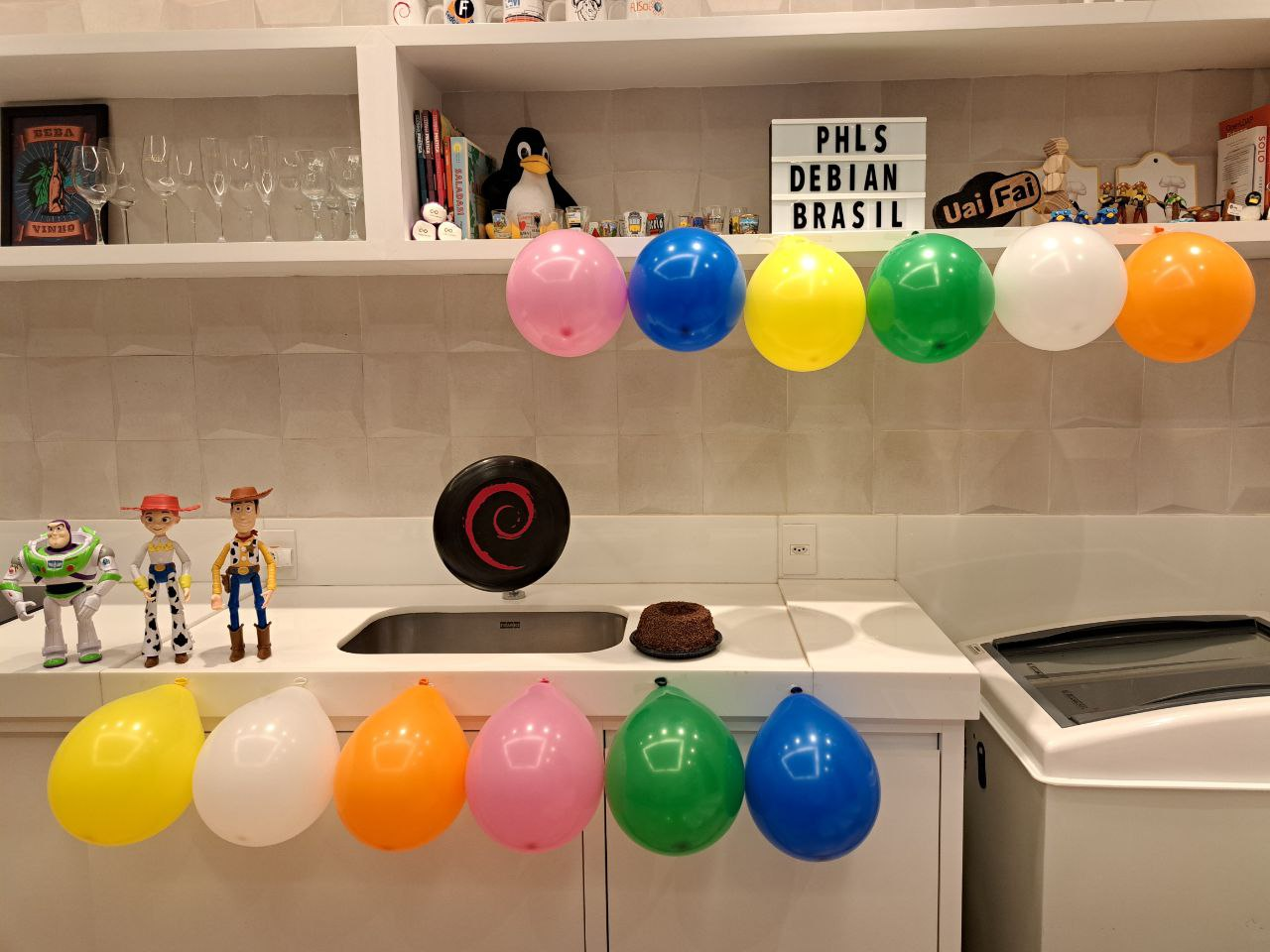

"Debian 30 years of collective intelligence" -Maqsuel Maqson

Brazil

The cake is there. :)

Honorary Debian Developers: Buzz, Jessie, and Woody welcome guests to this amazing party.

Honorary Debian Developers: Buzz, Jessie, and Woody welcome guests to this amazing party.

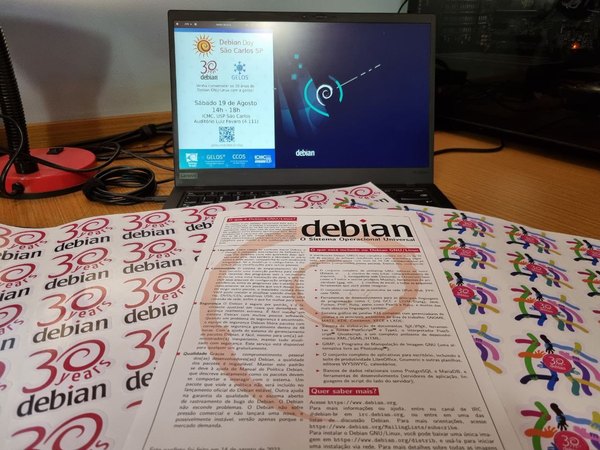

Sao Carlos, state of Sao Paulo, Brazil

Sao Carlos, state of Sao Paulo, Brazil

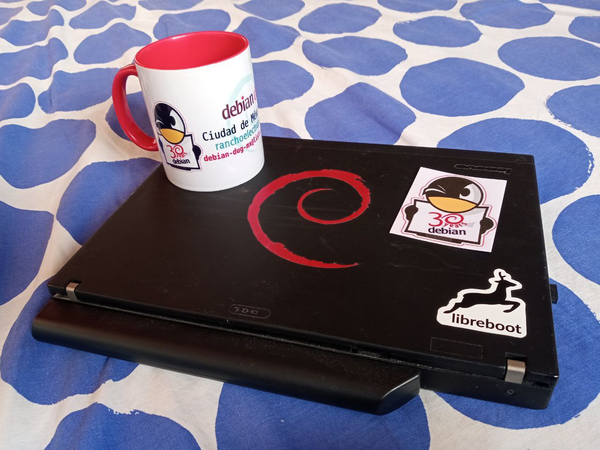

Stickers, and Fliers, and Laptops, oh my!

Stickers, and Fliers, and Laptops, oh my!

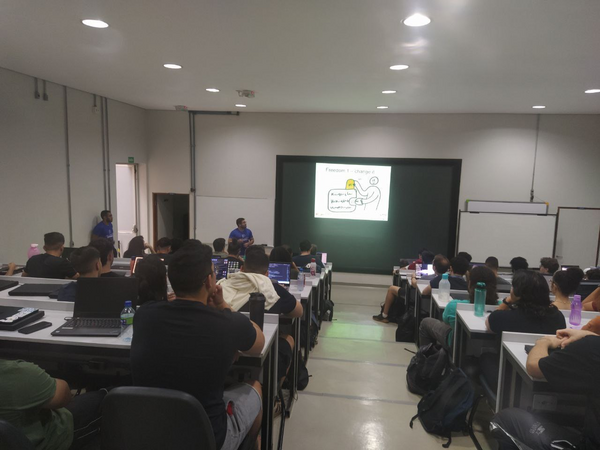

Belo Horizonte, Brazil

Belo Horizonte, Brazil

Bras lia, Brazil

Bras lia, Brazil

Bras lia, Brazil

Mexico

Bras lia, Brazil

Mexico

30 a os!

30 a os!

A quick Selfie

A quick Selfie

We do not encourage beverages on computing hardware, but this one is okay by us.

Germany

We do not encourage beverages on computing hardware, but this one is okay by us.

Germany

The German Delegation is also looking for this dog who footed the bill for the party, then left mysteriously.

We brought the party back inside at CCCamp

Belgium

Cake and Diversity in Belgium

El Salvador

Food and Fellowship in El Salvador

South Africa

Debian is also very delicious!

All smiles waiting to eat the cake

Reports

- Debian Day 30 years in Maceió - Brazil
- Debian Day 30 years in São Carlos - Brazil
- Debian Day 30 years in Pouso Alegre - Brazil
- Debian Day 30 years in Belo Horizonte - Brazil
- Debian Day 30 years in Curitiba - Brazil
- Debian Day 30 years in Brasília - Brazil
- Debian Day 30 years online in Brazil

Articles & Blogs

- Happy Debian Day - going 30 years strong - Liam Dawe
- Debian Turns 30 Years Old, Happy Birthday! - Marius Nestor
- 30 Years of Stability, Security, and Freedom: Celebrating Debian's Birthday - Bobby Borisov
- Happy 30th Birthday, Debian! - Claudio Kuenzier
- Debian is 30 and Sgt Pepper Is at Least Ninetysomething - Christine Hall
- Debian turns 30! - Corbet
- Thirty years of Debian! - Lennart Hengstmengel
- Debian marks three decades as 'Universal Operating System' - Sam Varghese
- Debian Linux Celebrates 30 Years Milestone - Joshua James
- 30 years on, Debian is at the heart of the world's most successful Linux distros - Liam Proven
- Looking Back on 30 Years of Debian - Maya Posch
- Cheers to 30 Years of Debian: A Journey of Open Source Excellence - arindam

Discussions and Social Media

- Debian Celebrates 30 Years - Source: News YCombinator
- Brand-new Linux release, which I'm calling the Debian ... - Source: News YCombinator
- Comment: Congrats @debian !!! Happy Birthday! Thank you for becoming a cornerstone of the #opensource world. Here's to decades of collaboration, stability & #software #freedom - openSUSELinux via X (formerly Twitter)
- Comment: Today we #celebrate the 30th birthday of #Debian, one of the largest and most important cornerstones of the #opensourcecommunity. For this we would like to thank you very much and wish you the best for the next 30 years! - TUXEDOComputers via X (formerly Twitter)
- Happy Debian Day! - Source: Reddit.com
- Video: The History of Debian The Beginning - Source: Linux User Space
- Debian Celebrates 30 years - Source: Lobste.rs
- Video: Debian At 30 and No More Distro Hopping! - LWDW388 - Source: LinuxGameCast
- Debian Celebrates 30 years! - Source: Debian User Forums
- Debian Celebrates 30 years! - Source: Linux.org

I discovered Gazelle Twin last year via Stuart

Maconie's Freak Zone (two of her

tracks ended up on my 2022 Halloween playlist).

Through her website I learned of The Horror Show!

exhibition at Somerset House1 in London that I managed to visit

earlier this year.

I've been intending to write a 5-track blog post (a la

Underworld, the Cure, Coil) for a while

but I have been spurred on by the excellent news that she's got a new album on

the way, and, she's performing at the Sage Gateshead in

November. Buy tickets

now!!

Here's the five tracks I recommend to get started:

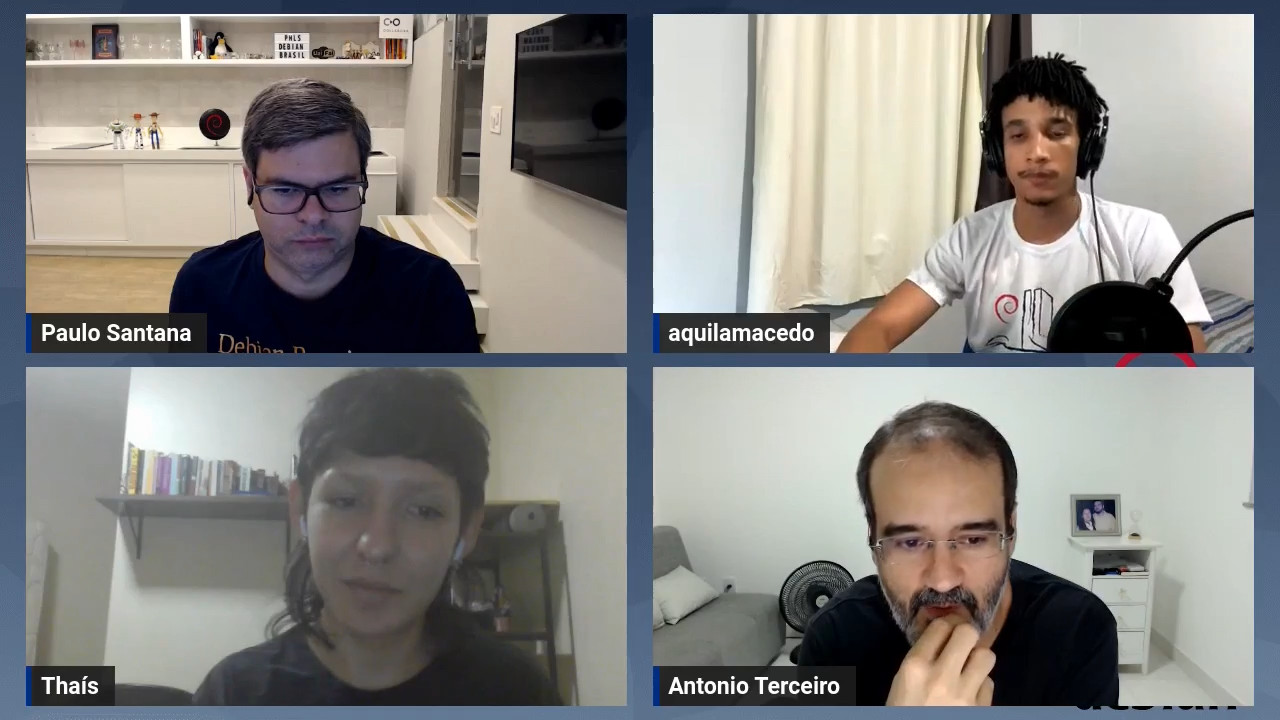

In 2023 the traditional Debian Day is being celebrated in a special way: after all, on August 16th Debian turned 30!

To celebrate this special milestone in Debian's life, the Debian Brasil community organized a week of online talks from August 14th to 18th. The event was called Debian 30 anos.

Two talks were held each night, from 7 pm to 10 pm, streamed on the Debian Brasil channel on YouTube, for a total of 10 talks. The recordings are already available on the Debian Brasil channel on Peertube as well.

Across the 10 activities we had the participation of 9 DDs, 1 DM and 3 contributors. The live audience varied a lot, and the peak was the talk about preseed with Eriberto Mota, when 47 people were watching.

Thank you to all participants for the contribution you made to the success of our event.

In 2023 the traditional Debian Day is

being celebrated in a special way, after all on August 16th Debian turned 30

years old!

To celebrate this special milestone in Debian's life, the

Debian Brasil community organized a week with

talks online from August 14th to 18th. The event was named

Debian 30 years.

Two talks were held per night, from 7:00 pm to 10:00 pm, streamed on the

Debian Brasil channel on YouTube

totaling 10 talks. The recordings are also available on the

Debian Brazil channel on Peertube.

We had the participation of 9 DDs, 1 DM, 3 contributors in 10 activities.

The live audience varied a lot, and the peak was on the preseed talk with

Eriberto Mota when we had 47 people watching.

Thank you to all participants for the contribution you made to the success of

our event.

For the first time, the city of Belo Horizonte held a

Debian Day to celebrate the

anniversary of the Debian Project.

The communities Debian Minas Gerais and Free Software Belo Horizonte and Region felt motivated to celebrate this special date because of the 30 years of the Debian Project in 2023, and they organized a meeting on August 12th at the UFMG Knowledge Space.

The Debian Day organization in Belo Horizonte received important support from the UFMG Computer Science Department to book the room used by the event.

Three activities were scheduled:

by Thiago Pezzo,

Debian contributor, pt_BR localization team

This year's Debian Day was a pretty special one, we are celebrating 30 years!

Given the importance of this event, the Brazilian community planned a very special week. Instead of only local gatherings, we had a week of online talks streamed via Debian Brazil's YouTube channel (soon the recordings will be uploaded to our team's peertube instance).

Nonetheless the local celebrations happened around the country and one was organized in Pouso Alegre, MG, Brazil, at the Instituto Federal de Educação, Ciência e Tecnologia do Sul de Minas Gerais (IFSULDEMINAS - Federal Institute of Education, Science and Technology of the South of Minas Gerais). The Institute, like many of its counterparts in Brazil, specializes in professional and technological curricula at the high school and undergraduate levels. All public, free and quality education!

The event happened on the afternoon of August 16th at the Pouso Alegre campus.

Some 30 students from the High School Computer Technician class attended the

presentation about the Debian Project and the Free

Software movement in general. Everyone had a great time! And afterwards we

had some spare time to chat.

I would like to thank all people who helped us:

I designed and printed a "terrain" base for my 3D castle in

OpenSCAD. The castle was the first thing I designed and printed on our (then

new) office 3D printer. I use it as a test bed if I want to try something new,

and this time I wanted to try procedurally generating a model.

I've released the OpenSCAD source for the terrain generator under the

name Zarchscape.

mid 90s terrain generation

![Terrain generation, 90s-style](https://jmtd.net/log/zarchscape/300x-carpet_90s.jpg)

Terrain generation, 90s-style. From this article

I dug out a computer running Fedora 28, which was released 2018-04-01 - over 5 years ago. Backing up the data and re-installing seemed tedious, but the current version of Fedora is 38, and while Fedora supports updates from N to N+2 that was still going to be 5 separate upgrades. That seemed tedious, so I figured I'd just try to do an update from 28 directly to 38. This is, obviously, extremely unsupported, but what could possibly go wrong?

Running sudo dnf system-upgrade download --releasever=38 didn't successfully resolve dependencies, but sudo dnf system-upgrade download --releasever=38 --allowerasing passed and dnf started downloading 6GB of packages. And then promptly failed, since I didn't have any of the relevant signing keys. So I downloaded the fedora-gpg-keys package from F38 by hand and tried to install it, and got a signature hdr data: BAD, no. of bytes(88084) out of range error. It turns out that rpm doesn't handle cases where the signature header is larger than a few K, and RPMs from modern versions of Fedora exceed that. The obvious fix would be to install a newer version of rpm, but that wouldn't be easy without upgrading the rest of the system as well - or, alternatively, downloading a bunch of build depends and building it. Given that I'm already doing all of this in the worst way possible, let's do something different.

The relevant code looks like:

    int32_t il_max = HEADER_TAGS_MAX;
    int32_t dl_max = HEADER_DATA_MAX;

    if (regionTag == RPMTAG_HEADERSIGNATURES) {
        il_max = 32;
        dl_max = 8192;
    }

Disassembling hdrblobRead, the relevant chunk ends up being:

    0x000000000001bc81 <+81>: cmp $0x3e,%ebx
    0x000000000001bc84 <+84>: mov $0xfffffff,%ecx
    0x000000000001bc89 <+89>: mov $0x2000,%eax
    0x000000000001bc8e <+94>: mov %r12,%rdi
    0x000000000001bc91 <+97>: cmovne %ecx,%eax

Here 0x3e is the tag being compared, 0x2000 is the 8192 byte limit, and the cmovne swaps in the default 0xfffffff whenever the comparison is not equal.

A one byte change to this chunk alters how the if (regionTag == RPMTAG_HEADERSIGNATURES) code behaves, so that the default limits are used even if the header section in question is the signatures. And with that one byte modification, rpm from F28 would suddenly install the fedora-gpg-keys package from F38. Success!
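A one byte modification of this kind can be made with standard tools. The sketch below is not the actual rpm patch: the helper name patch_byte, the scratch file, the offset and the value are all invented for illustration, and on a real binary the offset and replacement byte would have to come from the disassembly. It is safest to try something like this on a copy.

```shell
#!/bin/sh
# patch_byte FILE OFFSET VALUE: overwrite a single byte of FILE in place.
# VALUE is a number such as 0x58; conv=notrunc stops dd from truncating
# the rest of the file. (Hypothetical helper, illustration only.)
patch_byte() {
  # Convert VALUE to a three digit octal escape that printf understands.
  printf "\\$(printf '%03o' "$3")" |
    dd of="$1" bs=1 seek="$2" conv=notrunc 2>/dev/null
}

# Demo on a scratch file: the byte at offset 2 ('C') becomes 'X' (0x58).
scratch="$(mktemp)"
printf 'ABCDEF' >"$scratch"
patch_byte "$scratch" 2 0x58
cat "$scratch"
```

The file keeps its original length; only the byte at the given offset changes.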

Next.